Binary

mnist_number_recognition/MNIST_data/t10k-images-idx3-ubyte.gz

Binary

mnist_number_recognition/MNIST_data/t10k-labels-idx1-ubyte.gz

Binary

mnist_number_recognition/MNIST_data/train-images-idx3-ubyte.gz

Binary

mnist_number_recognition/MNIST_data/train-labels-idx1-ubyte.gz

Binary

mnist_number_recognition/images/mnist_accuracy_evaluation.png

File diff is too large to display

+ 11342

- 0

mnist_number_recognition/model.ckpt.meda.json

Binary

mnist_restructured/MNIST_data/t10k-images-idx3-ubyte.gz

Binary

mnist_restructured/MNIST_data/t10k-labels-idx1-ubyte.gz

Binary

mnist_restructured/MNIST_data/train-images-idx3-ubyte.gz

Binary

mnist_restructured/MNIST_data/train-labels-idx1-ubyte.gz

Binary

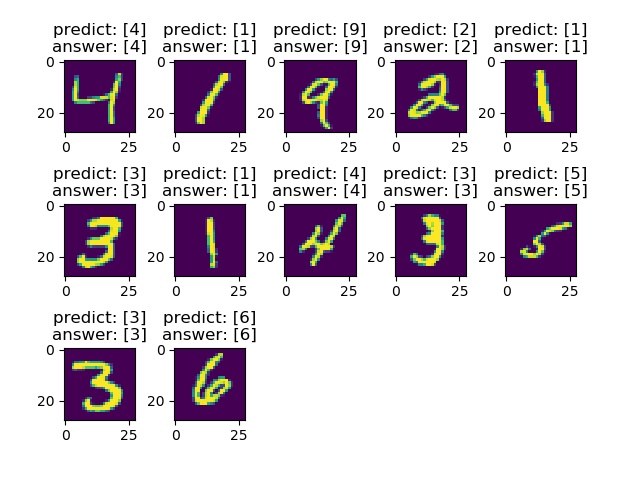

mnist_restructured/images/mnist_result_evaluation.jpg

+ 106

- 0

mnist_restructured/mnist_eval.py

+ 33

- 0

mnist_restructured/mnist_inference.py

+ 81

- 0

mnist_restructured/mnist_train.py

+ 6

- 0

mnist_restructured/model/checkpoint

Binary

mnist_restructured/model/model.ckpt-37501.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-37501.index

Binary

mnist_restructured/model/model.ckpt-37501.meta

Binary

mnist_restructured/model/model.ckpt-40001.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-40001.index

Binary

mnist_restructured/model/model.ckpt-40001.meta

Binary

mnist_restructured/model/model.ckpt-42501.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-42501.index

Binary

mnist_restructured/model/model.ckpt-42501.meta

Binary

mnist_restructured/model/model.ckpt-45001.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-45001.index

Binary

mnist_restructured/model/model.ckpt-45001.meta

Binary

mnist_restructured/model/model.ckpt-46001.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-46001.index

Binary

mnist_restructured/model/model.ckpt-46001.meta

Binary

mnist_restructured/model/model.ckpt-47001.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-47001.index

Binary

mnist_restructured/model/model.ckpt-47001.meta

Binary

mnist_restructured/model/model.ckpt-47501.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-47501.index

Binary

mnist_restructured/model/model.ckpt-47501.meta

Binary

mnist_restructured/model/model.ckpt-48001.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-48001.index

Binary

mnist_restructured/model/model.ckpt-48001.meta

Binary

mnist_restructured/model/model.ckpt-49001.data-00000-of-00001

Binary

mnist_restructured/model/model.ckpt-49001.index

Binary

mnist_restructured/model/model.ckpt-49001.meta

+ 3

- 0

tests/forward_propagation.py
